Let’s start with a theory.

In 2003, Clayton Christensen published The Innovator’s Solution, a follow-up to his seminal work — The Innovator’s Dilemma — in which he introduced his most famous idea, disruptive innovation.

In The Innovator’s Solution, Christensen and his co-author, Michael Raynor, attempt to explain how market incumbents can successfully navigate the disruptions introduced by innovative technologies.

The basic version of their idea is that success requires matching your product to the market environment that you’re competing in.

When a new technology comes along, it creates an environment where the best product — the one that most effectively leverages the new technology to deliver the best performance and a tightly integrated experience — wins. However, once the market matures and the new technology becomes more commoditized, the value of having the best product lessens (customers aren’t willing to pay a premium for the best performance when the second-place provider’s performance is nearly the same), and the winners become those with the most open and modular products.

Here’s Christensen explaining how this theory applies to the personal computer market and the changing fortunes of Apple, IBM, Intel, and Microsoft in the 1980s and 1990s:

During the early years, Apple Computer — the most integrated company with a proprietary architecture — made by far the best desktop computers. They were easier to use and crashed much less often than computers of modular construction. Ultimately, when the functionality of desktop machines became good enough, IBM’s modular, open-standard architecture became dominant. Apple’s proprietary architecture, which in the not-good-enough circumstance was a competitive strength, became a competitive liability in the more-than-good-enough circumstance. Apple as a consequence was relegated to niche-player status as the growth explosion in personal computers was captured by the nonintegrated providers of modular machines.

Overall, I find this theory compelling. However, as Ben Thompson points out, Apple is actually a bad example for Christensen to use.

Christensen believed that the same forces of commoditization and modularity that hurt Apple in the personal computer business in the 1980s and 1990s would doom Apple’s iPod business in the 2000s and its iPhone business in the 2010s:

The transition from proprietary architecture to open modular architecture just happens over and over again. It happened in the personal computer. Although it didn’t kill Apple’s computer business, it relegated Apple to the status of a minor player. The iPod is a proprietary integrated product, although that is becoming quite modular. You can download your music from Amazon as easily as you can from iTunes. You also see modularity organized around the Android operating system that is growing much faster than the iPhone. So I worry that modularity will do its work on Apple.

Obviously, that worry turned out to be unfounded, and Ben Thompson’s explanation for Christensen’s mistake is very simple:

Christensen’s theory is based on examples drawn from buying decisions made by businesses, not consumers.

The attribute most valued by consumers … is ease-of-use. It’s not the only one … but all things being equal, consumers prefer a superior user experience.

The business buyer, famously, does not care about the user experience. They are not the user, and so items that change how a product feels or that eliminate small annoyances simply don’t make it into their rational decision-making process.

Christensen’s research is tilted towards business buyers. Critically, this includes the PC. For most of its history, the vast majority of PC purchasers have been businesses, who have bought PCs on speeds, feeds, and ultimately, price.

Consumer buyers and business buyers are fundamentally different. They value different things.

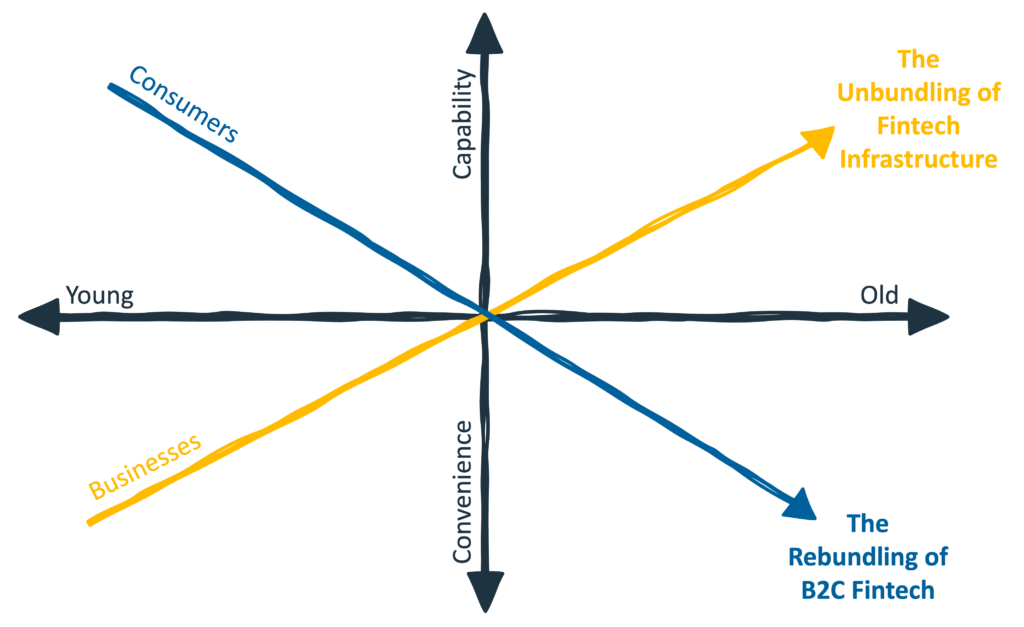

This insight, when combined with Christensen’s theories on disruption and the progression from integration to modularization in maturing markets, leads me to my own theory of disruption in financial services.

My theory has five pillars:

1.) When a disruptive new technology emerges, it creates a competitive environment in which the best product wins. However, the definition of “best product” differs for consumers and businesses, and it tends to change over time as the market matures.

2.) In financial services, the best consumer products tend to anchor on convenience.

The majority of consumers don’t want to spend any more time than they absolutely have to managing their money, so the most convenient product — the one that saves consumers the most time and minimizes the required cognitive load — wins.

3.) By contrast, the best financial products for businesses tend to anchor on capability.

Unlike consumers, businesses exist primarily to make as much money as possible. Thus, financial products that provide businesses with the most robust set of capabilities for managing their cash flows, growing their revenues, and increasing their profits tend to win.

4.) What’s interesting, though, is that young customers — both consumers and businesses — value financial products very differently than their older cohorts.

While most consumers are pressed for time and thus value convenience above all else, younger consumers have ample free time and are much more willing to experiment with lots of different products in order to find the ones with the best individual capabilities (picture a 20-year-old with 15+ fintech apps on their phone).

On the other hand, while most businesses value capability in their financial services stacks, small businesses and startups don’t have the luxury of obsessing over it. They have very limited time and resources, and thus, they tend to value the convenience of a more bundled solution far more than their larger, more established competitors do.

5.) As The Innovator’s Dilemma predicts, incumbents in financial services focus on serving the most profitable segments of the market (i.e., older consumers and mid-size and enterprise businesses), while the market disruptors leveraging innovative technologies focus on the underserved segments (i.e., younger consumers and small businesses and startups) before attempting to move upmarket.

What I like about my theory is that it explains why our modern era of fintech innovation, which was born out of the internet’s disruptive impact on financial services, has played out differently for consumers and businesses.

The consumer story is the one we all know by heart.

B2C fintech providers initially gained traction by unbundling legacy financial services offerings, building superior individual products that younger consumers would proactively seek out. As they’ve grown, these providers have traded capability for convenience, rebundling their products into solutions that appeal to older, more time-constrained customers.

The business story is the one you don’t hear about as much, but if you dig into the evolution of B2B fintech and fintech infrastructure over the last ten years (and especially the last year or so), you will see a mirror image of the consumer fintech market’s trajectory.

Providers started by abstracting away the complexity that startup CEOs and small business owners face in running their companies, offering a bundle of different products and services through a single, convenient platform. However, we’re starting to see these providers change their platforms, embracing modularity and open architectures, to court larger enterprise buyers, which focus much more heavily on best-of-breed capabilities.

This trend towards unbundling and modularity for enterprise business buyers in fintech is newer. We’re just starting to see signs of it in the market, which makes sense given that B2B fintech and fintech infrastructure are newer fields than B2C fintech, which is already well into its second act.

And, as with seemingly everything in financial services, when a new trend emerges, you can expect to see it manifest in payments first.

Stripe

At this year’s Stripe Sessions, the company’s annual developer conference, Stripe’s President of Product and Business, Will Gaybrick, got up on stage and said this:

We’ve long believed that global commerce and money movement is a team sport. And you all work at some of the most sophisticated companies in the world. You’ve been asking for years to make Stripe more modular and interoperable so that our services can fit into your existing technology stack. Over the past few years, we’ve quietly made several of Stripe’s services work better with other PSPs [payment service providers].

Today, we’re taking this one step further. We’re extending our modularity to the very core of Stripe — payments processing. We’re launching our Vault and Forward API so you can use even more of Stripe with other payment processors.

Three of Stripe’s most widely used services — the Optimized Checkout Suite, Billing, and Radar — will work with other PSPs [by the end of the year]. And going forward, as a general architectural principle, all of Stripe’s products will gracefully interoperate with third-party processors.

This is a big deal. In the future, I think we may look back on this as the moment when the modern fintech infrastructure market moved into its second act — the era of unbundling.

It’s undoubtedly a significant change for Stripe, which has always maintained that its strategy is to serve both small businesses and large enterprises equally. Indeed, Stripe’s CEO Patrick Collison reiterated that exact point at this year’s Stripe Sessions:

One of the less-understood parts of our strategy is that we care equally about both ends of the continuum. Both the world’s most innovative new businesses and also the world’s most established businesses.

The challenge, of course, in serving both ends of the market is that the strategies for profitably dominating each end are different, and occasionally in conflict with each other.

In an excellent essay from a few years ago, Packy McCormick pointed out the obvious benefit that product bundling had for Stripe in retaining its payments processing customers who, absent other incentives, might be inclined to shop around for a cheaper PSP:

Switching costs increase as companies use more Stripe products. If my corporate card is with Stripe and I take loans from Stripe Capital, is it worth lowering my spending limit and losing access to next-day loans to save 10 bps in fees? For many companies, it’s generally a better financial decision to stick with Stripe.

The answer to Packy’s question — If my corporate card is with Stripe and I take loans from Stripe Capital, is it worth lowering my spending limit and losing access to next-day loans to save 10 bps in fees? — depends on the answer to another question:

What volume of payments are you processing?

At a sufficient volume (like the volume that Stripe’s enterprise customers are processing), the answer is most definitely yes.
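To put rough numbers on Packy’s question, here is a small Python sketch of the fee arithmetic. The 10 bps figure comes from his quote; the annual volumes are hypothetical, and the sketch deliberately ignores everything else (card limits, loan access, integration costs) that goes into the real decision.

```python
def annual_fee_savings(annual_volume_usd: float, bps_saved: float = 10) -> float:
    """Dollars saved per year by paying `bps_saved` basis points less on processing.

    One basis point is 0.01%, so multiply by the bps figure and divide by 10,000.
    """
    return annual_volume_usd * bps_saved / 10_000


# Hypothetical annual processing volumes: a small startup, a mid-size company, an enterprise.
for volume in (1_000_000, 50_000_000, 5_000_000_000):
    print(f"${volume:,} processed -> ${annual_fee_savings(volume):,.0f} saved per year")
```

At $1 million a year, 10 bps is worth $1,000, which a corporate card and next-day loans can easily outweigh. At $5 billion a year, the same 10 bps is worth $5 million, and the calculus flips.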

This explains Stripe’s decision to decouple payments processing from the rest of its software stack.

In pursuit of growth within the enterprise segment, Stripe is purposefully weakening the value of its product bundle, which has served as a very effective competitive moat within the small business and startup market segment where Stripe grew up.

This brings to mind two big-picture questions:

- Why do enterprise customers in financial services value modularity so highly?

- What is the best way for larger companies to assemble and orchestrate a more modular financial services stack?

Let’s tackle each of these questions in turn.

Why is Modularity Valuable?

The answer to this question is reasonably straightforward — financial services is a mission-critical area of operations.

If a company can’t process payments or stop fraud or accurately reconcile its accounts, those failures can become existential risks to the business. Modularity gives companies the flexibility to minimize those risks along a number of different dimensions, including:

- Redundancy — Relying on a single provider for a critical activity like payment processing exposes companies to significant losses in the event of an outage, and last year’s Square outage reminded us that this risk is very real, even with market leaders.

- Coverage — Large enterprise companies have customers, suppliers, and employees all over the world. Unfortunately, while commerce is global, payment systems are primarily local, which makes the ability to integrate multiple PSPs to cover different geographies a necessity.

- Price — Most financial products are commodities. This is especially true in payments processing. Maintaining the flexibility to pit different commoditized providers against each other ensures that companies with sufficient leverage are always getting the best possible price.

- Capability — My fintech friend and podcast co-host Simon Taylor tweeted a while back that, “Nearly every new Fintech company I see is unbundling some Stripe product.” This is a great observation and a helpful reminder of just how much competition Stripe has helped to usher into the market. And while it may not be good for Stripe, it is undoubtedly beneficial for Stripe’s enterprise customers to retain the flexibility to pick and choose between these different best-of-breed options.
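The first three dimensions (redundancy, coverage, and price) are easy to see in a simplified routing layer. The Python sketch below is purely illustrative: the processor names, regions, fees, and the `send` callback are hypothetical stand-ins, not any provider’s real API.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


class ProcessorDown(Exception):
    """Raised by a send function when a processor is unavailable."""


@dataclass
class Processor:
    name: str
    regions: set[str]     # geographies this processor can handle (coverage)
    fee_bps: float        # negotiated fee in basis points (price)
    healthy: bool = True  # in practice this would come from health checks


def route(processors: list[Processor], region: str) -> list[Processor]:
    """Healthy processors that cover `region`, cheapest first."""
    candidates = [p for p in processors if region in p.regions and p.healthy]
    return sorted(candidates, key=lambda p: p.fee_bps)


def charge(
    processors: list[Processor],
    region: str,
    amount_cents: int,
    send: Callable[[Processor, int], dict],
) -> tuple[str, dict]:
    """Try each candidate in order, falling back on an outage (redundancy)."""
    for processor in route(processors, region):
        try:
            return processor.name, send(processor, amount_cents)
        except ProcessorDown:
            continue  # this processor is down; try the next-cheapest one
    raise RuntimeError(f"no processor available for region {region!r}")


# Hypothetical processors: psp_b is cheapest in the US but is currently down.
psps = [
    Processor("psp_a", {"US", "EU"}, fee_bps=29),
    Processor("psp_b", {"US"}, fee_bps=25),
    Processor("psp_c", {"BR"}, fee_bps=35),
]


def flaky_send(processor: Processor, amount_cents: int) -> dict:
    if processor.name == "psp_b":
        raise ProcessorDown(processor.name)
    return {"status": "authorized", "amount": amount_cents}


print(charge(psps, "US", 1999, flaky_send))  # ('psp_a', {'status': 'authorized', 'amount': 1999})
```

A production orchestration layer would also need idempotency keys, so that falling back after an ambiguous timeout doesn’t charge the same customer twice.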

While payments is the easiest area within the modern fintech infrastructure market to observe this burgeoning focus on modularity, it’s not difficult to look at other areas and see similar needs:

- BaaS — The failure of Synapse, which positioned itself as a bundled, all-in-one platform for launching financial products (deposit accounts, credit cards, crypto accounts, etc.), provides a stark reminder of the importance of redundancy and avoiding critical points of failure.

- Data Aggregation — As I wrote about recently, there is significant value to companies in not being tied to the uptime and coverage of a single data aggregator (which, even for the market leaders, is still less than you’d ideally want), but rather working through a third-party super aggregator or in-house aggregation abstraction layer, as large B2C fintech providers like Chime and Cash App do.

- Identity Management — The pressure to constantly seek out best-of-breed providers for ID verification and authentication is extremely high, thanks to the relentless and continually evolving onslaught of fraud in financial services. This is why identity management and fraud prevention are among the few sectors within the fintech infrastructure market that have had significant success selling to large banks.

What’s the Best Way to Enable Modularity?

This one is not so straightforward.

If you work at a large or rapidly scaling company, and you are interested in decoupling the components of your financial services stack and embracing a more modular and composable approach, where do you start? Which parts of the stack do you focus on? Which infrastructure providers do you trust to help you?

It’s tricky.

In enterprise sales, you rarely get honest answers. If the prevailing sentiment in the market is that modularity is good (and that does seem to be the world we are headed into), every provider will tell you that interoperability is one of their core architectural principles.

But saying it doesn’t always make it so.

Again, we can use Stripe as our example.

Jareau Wade, who publishes a wonderful newsletter called Batch Processing, wrote a helpful article explaining how Stripe’s new Vault and Forward API (which is the company’s solution for enabling multiprocessor payment processing for its customers) works.

One of Jareau’s most interesting observations is that Stripe’s Vault and Forward API, as it works today, has some important limitations. For example, while the API can be used to forward along payment transaction requests to third-party PSPs, it is much harder for those PSPs to pass back data or requests for post-transaction actions:

According to Gaybrick, Billing and Radar, Stripe’s subscription billing and fraud tools, respectively, “will work seamlessly with other PSPs by the end of the year.” But those services—like the Optimized Checkout Suite, which is compatible with the Vault and Forward API today—are mostly focused on the experience before and during a transaction.

[An] example of Stripe’s multiprocessor support lacking the polish of Stripe’s more tightly integrated suite of tools comes after the transaction occurs. Ops and risk teams cannot manage refunds and disputes entirely from Stripe in a multiprocessor setup like they can using Stripe Payments. From Stripe’s docs again:

“If you’re using the Vault and the Forward API to make an authorization request, you must handle any post-transaction actions, such as refunds or disputes, directly with the third-party processor.”
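To make that limitation concrete, here is a hypothetical Python sketch of the bookkeeping a merchant takes on in a multiprocessor setup: it has to remember which processor authorized each charge and send refunds back to that processor. None of these names come from Stripe’s API; the refund callbacks stand in for each PSP’s own refund endpoint.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Hypothetical refund client: takes the processor's own transaction reference and an amount.
RefundFn = Callable[[str, int], str]


@dataclass
class Authorization:
    charge_id: str      # the merchant's internal ID for the charge
    processor: str      # which PSP actually authorized it
    processor_ref: str  # that PSP's transaction ID
    amount_cents: int


class RefundRouter:
    """Hypothetical merchant-side helper that sends refunds to the PSP
    that authorized the original charge."""

    def __init__(self, refund_clients: Dict[str, RefundFn]) -> None:
        self.refund_clients = refund_clients
        self.ledger: Dict[str, Authorization] = {}

    def record(self, auth: Authorization) -> None:
        self.ledger[auth.charge_id] = auth

    def refund(self, charge_id: str, amount_cents: int) -> str:
        auth = self.ledger[charge_id]
        # Simplification: earlier partial refunds are not tracked.
        if amount_cents > auth.amount_cents:
            raise ValueError("refund exceeds the original charge")
        return self.refund_clients[auth.processor](auth.processor_ref, amount_cents)


router = RefundRouter({
    "psp_a": lambda ref, amt: f"psp_a refunded {amt} on {ref}",
    "psp_b": lambda ref, amt: f"psp_b refunded {amt} on {ref}",
})
router.record(Authorization("ch_1", "psp_b", "txn_789", 5000))
print(router.refund("ch_1", 2000))  # psp_b refunded 2000 on txn_789
```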

And while Jareau explained in a follow-up article how Stripe is looking to address this deficiency through the use of a “reverse API” that would allow transactions processed on other PSPs via Stripe to be fully normalized in Stripe’s dashboard, API logs, and ledger, there’s still a catch — the third-party PSPs (or their merchant customers) would have to build to Stripe’s API specification, rather than Stripe building to theirs.

Why is Stripe taking this approach?

My guess is that it is primarily motivated by competitive concerns.

Stripe is happy to let smaller regional PSPs integrate into its multiprocessor APIs, but it’s not going to go out of its way to enable the same type of magical, end-to-end “everything just works seamlessly” experience for the large global PSPs like Adyen and Braintree (which also have no inherent interest in playing nicely with each other or Stripe).

Jareau seems to agree:

During Stripe Sessions, Gaybrick mentioned that “over the past few years we’ve quietly made several of Stripe services work better with other PSPs.” One could claim this is a strategic masterstroke by Stripe as it attempts to leave the world of payments behind, focusing instead on higher-value software products. Or maybe Stripe was forced to implement a basic form of payment orchestration to attract and retain important enterprise customers. I don’t know for sure, but if I had to guess, the reason these integrations were completed quietly is because Stripe begrudgingly did them. In fact, the Vault and Forward API’s list of supported endpoints reads like a list of Stripe’s main competition for enterprise-grade customers [Adyen, Braintree, Checkout, GMO Payment Gateway, PaymentsOS (PayU), and Worldpay]

This is understandable from Stripe’s perspective, but it illustrates the subtle challenge for enterprise companies looking to assemble and maintain a modular and composable financial services stack — the details of how modularity is enabled matter tremendously.

This brings us to vaulting and tokenization.

Come for the descoped liability. Stay for the orchestration.

The goal is to assemble and maintain a financial services stack that enables maximum flexibility.

The challenge, as we’ve just outlined, is that while integrated ecosystem providers like Stripe and Apple will occasionally make small moves towards modularity when customers or regulators demand it (Apple recently announced that it would be opening up access to its NFC chip to third-party developers), they will never do more than they have to. It is simply not in their best interests to go too far down that road (nor is it in keeping with their product development philosophies).

As a result, companies that benefit from closed ecosystems will usually do everything in their power to keep their ecosystems closed. One of the ways this manifests in financial services is the fierceness with which ecosystem providers protect their proprietary data.

Data, in financial services, is the key to unlocking modularity. With the right data elements, companies can orchestrate countless critical workflows, from payment initiation to consumer-permissioned data sharing to loan underwriting.

This is why, to use just one example, banks are so hostile towards open banking. They understand that making their account and transaction data more portable makes it easier for their customers to move their deposits, loans, and payments across different providers.

However, while financial services data is very valuable from an orchestration perspective, it’s also extremely sensitive. Processing or storing sensitive data such as credit and debit card numbers, bank account numbers, usernames and passwords, social security numbers, or photos of driver’s licenses, even briefly, exposes companies to significant compliance requirements and legal liability.

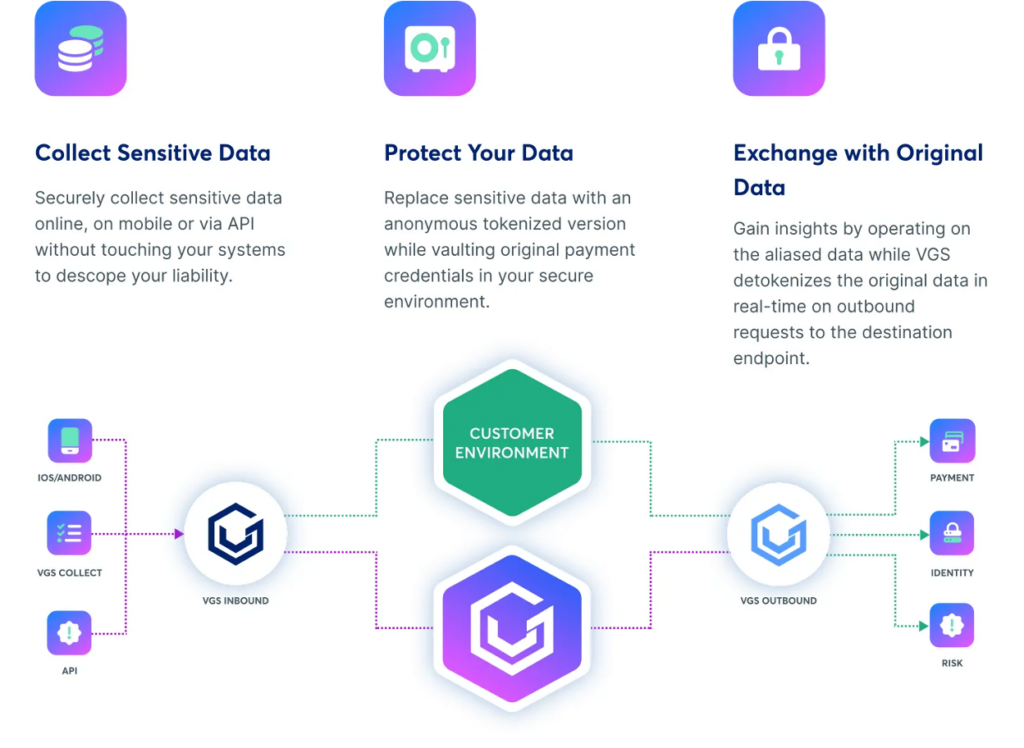

This is why vaulting and tokenization were created.

Tokenization is the process of transforming sensitive data into a unique, non-sensitive string of symbols called a token. This allows businesses to securely collect sensitive data without ever actually touching it (thus descoping their liability) and generate tokens representing that data for storage and use within internal systems and with third parties.
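As a toy illustration of the idea, the Python sketch below swaps a card number for a random token and keeps the mapping in an in-memory vault. The `TokenVault` class and the `tok_` prefix are hypothetical; a real tokenization service adds encryption, access controls, auditing, and the compliance controls that make descoping possible.

```python
import secrets


class TokenVault:
    """Toy in-memory vault mapping random tokens to sensitive values."""

    def __init__(self) -> None:
        self._store: dict[str, str] = {}

    def tokenize(self, sensitive_value: str) -> str:
        # The token is random, so it reveals nothing about the original value.
        token = "tok_" + secrets.token_urlsafe(16)
        self._store[token] = sensitive_value
        return token

    def detokenize(self, token: str) -> str:
        # Only the vault can map a token back to the real value.
        return self._store[token]


vault = TokenVault()
token = vault.tokenize("4242424242424242")  # a widely used test card number
print(token)  # random on every run: "tok_" followed by URL-safe characters
```

The merchant stores and passes around `token`; the card number itself lives only inside the vault.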

Here’s a graphic from VGS to help you visualize how the process works:

Because the primary motivation for tokenization is descoping the risk and liability of handling sensitive information, tokenization services are used up and down the financial services stack. Here’s just a sampling of the different types of tokens that are commonly used in payments:

- PSP Tokens — Allow merchants to offload the collection and storage of payment card data (which is governed by a very strict and onerous framework called the Payment Card Industry Data Security Standard, or PCI DSS) to their PSP, which collects the data and generates tokens for the merchants to use.

- Issuer Tokens — Generated by card issuers or issuer processors to descope PCI compliance and enable specific use cases.

- Network Tokens — Payment card tokens generated at the network level (e.g., Visa, Mastercard, American Express) for a specific merchant and a specific card account, with extra security features (like cryptograms for individual authorizations).

- Tokenized Account Numbers (TANs) — The equivalent of payment card tokens, but for bank account and routing numbers. TANs are generated by banks or their core system providers.

- Device Tokens — A token representing a specific payment card account tied to a specific device (like a smartphone). Commonly issued by Apple and Google for use in their respective mobile wallets.
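The cryptogram idea behind network tokens can be illustrated with a generic keyed hash. This is not the algorithm the card networks actually use (their schemes are defined in network and EMVCo specifications); it is a hypothetical Python sketch of the underlying principle: each authorization carries a one-time value bound to the token, the amount, and a counter, so a stolen token or an old cryptogram can’t simply be replayed.

```python
import hashlib
import hmac


def make_cryptogram(key: bytes, token: str, amount_cents: int, counter: int) -> str:
    """Hypothetical per-authorization cryptogram: an HMAC over the transaction details."""
    message = f"{token}|{amount_cents}|{counter}".encode()
    return hmac.new(key, message, hashlib.sha256).hexdigest()[:16]


class CryptogramVerifier:
    """Checks cryptograms and rejects replays by requiring an increasing counter."""

    def __init__(self, key: bytes) -> None:
        self.key = key
        self.last_counter: dict[str, int] = {}

    def verify(self, token: str, amount_cents: int, counter: int, cryptogram: str) -> bool:
        if counter <= self.last_counter.get(token, -1):
            return False  # replayed or out-of-order authorization
        expected = make_cryptogram(self.key, token, amount_cents, counter)
        if not hmac.compare_digest(expected, cryptogram):
            return False  # wrong key, or token/amount/counter was altered
        self.last_counter[token] = counter
        return True


key = b"demo-shared-secret"  # hypothetical key shared by token holder and verifier
verifier = CryptogramVerifier(key)
c1 = make_cryptogram(key, "tok_net_123", 1999, counter=1)
print(verifier.verify("tok_net_123", 1999, 1, c1))  # True
print(verifier.verify("tok_net_123", 1999, 1, c1))  # False: same cryptogram replayed
c2 = make_cryptogram(key, "tok_net_123", 1999, counter=2)
print(verifier.verify("tok_net_123", 5000, 2, c2))  # False: amount was changed
```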

(Editor’s Note – While payments use cases are the most popular examples of tokenization in use today, it’s easy to see how the technology could be applied, more broadly, to almost any area of fintech infrastructure that deals with sensitive data, including data aggregation, identity management, and even BaaS.)

With so many different options, a question that comes up frequently in payments is, “Where should I store my tokens?”

This is a more important question than the companies asking it may fully appreciate.

This is because tokens — which, again, are simply stand-ins for sensitive, real-world data elements like card or account numbers — can be used to orchestrate a wide variety of financial services workflows. Network tokens can be used to enable recurring transactions like subscriptions. Tokenized account numbers can be used to facilitate pay-by-bank transactions. Issuer tokens can be leveraged to generate virtual cards or provision mobile wallets.

Thus, tokens represent either a path to modularity (if they can be used to orchestrate workflows across different providers) or a path to ecosystem lock-in (if they are provided or stored by an ecosystem provider that is incentivized to limit customers’ choices).

And this brings us to token vaults.

A token vault is a secure system for storing and managing digital tokens. It is what is depicted in the center of this diagram from VGS:

Token vaults are designed to provide a layer of security and control by allowing companies to use sensitive data collected from customers without ever storing it within their own walls.
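Here is a hypothetical Python sketch of why a vault can double as an orchestration layer: the merchant only ever holds tokens, and the vault swaps in the real values when it forwards a request to whichever processor the merchant names. The `ForwardingVault` class and the processor callbacks are illustrative stand-ins, not VGS’s or Stripe’s actual APIs; real services typically do this by proxying HTTPS requests.

```python
import secrets
from typing import Callable, Dict, List, Tuple

# Hypothetical processor client: takes a request payload and returns a response.
ProcessorFn = Callable[[dict], dict]


class ForwardingVault:
    """Hypothetical vault that detokenizes requests on their way to a processor."""

    def __init__(self, processors: Dict[str, ProcessorFn]) -> None:
        self._store: Dict[str, str] = {}
        self._processors = processors

    def tokenize(self, value: str) -> str:
        token = "tok_" + secrets.token_urlsafe(16)
        self._store[token] = value
        return token

    def forward(self, processor: str, payload: dict) -> dict:
        # Swap any known token for its real value; leave everything else untouched.
        detokenized = {
            key: self._store.get(value, value) if isinstance(value, str) else value
            for key, value in payload.items()
        }
        return self._processors[processor](detokenized)


received: List[Tuple[str, dict]] = []  # what the (fake) processors actually saw


def psp_a(request: dict) -> dict:
    received.append(("psp_a", request))
    return {"processor": "psp_a", "status": "authorized"}


def psp_b(request: dict) -> dict:
    received.append(("psp_b", request))
    return {"processor": "psp_b", "status": "authorized"}


vault = ForwardingVault({"psp_a": psp_a, "psp_b": psp_b})
card_token = vault.tokenize("4242424242424242")
order = {"card_number": card_token, "amount": 1999, "currency": "usd"}

# Same token, two processors: switching requires no re-collection of card data.
print(vault.forward("psp_a", order))
print(vault.forward("psp_b", order))
```

Because the processor is just a parameter to `forward`, the same stored tokens can be routed to a different PSP without asking customers to re-enter their cards, which is the kind of flexibility that makes the choice of vault so consequential.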

However, the usefulness of token vaults can vary quite a bit, depending on who is offering them and how they are designed. If they are offered by an ecosystem provider that is not committed to modularity, then the vault may not support the level of interoperability that enterprise companies expect. This is the root of Jareau Wade’s critique of Stripe’s new token vault and associated APIs — they don’t (yet) enable full interoperability between different PSPs.

This is why token vaults have, over the last 12-18 months, become one of the most consequential decision points in financial services infrastructure. You’re not just selecting a place to store sensitive customer data. You are also selecting a data routing and orchestration framework that can unlock a more modular, best-of-breed approach to payment processing, card issuing, data aggregation, banking-as-a-service, and digital identity management.

In the era of unbundled fintech infrastructure, that’s not a decision to be taken lightly.

About Sponsored Deep Dives

Sponsored Deep Dives are essays sponsored by a very carefully curated list of companies (selected by me), in which I write about topics of mutual interest to me, the sponsoring company, and (most importantly) you, the audience. If you have any questions or feedback on these sponsored deep dives, please DM me on Twitter or LinkedIn.

Today’s Sponsored Deep Dive was brought to you by VGS.

VGS is the world’s leader in payment tokenization. VGS is a payments infrastructure company, with more than 3 billion stored tokens globally. The world’s most recognizable merchants, banks and fintechs embed VGS into their technology stack to enhance payments performance and efficiency across processors, networks, PSPs and third-party providers. We solve payments challenges for card acceptance and issuance, orchestration, PCI compliance, PII protection, loyalty, open banking and more.

VGS works with customers on every major continent, including AWS, Albertsons, Rappi, Zilch, and SumUp. The company now stores more than 70 percent of credentials in the United States and initiates more than 30B data calls per year. Learn more at vgs.io and by following them on LinkedIn.